Illusions for depth models and illusions for humans

This is the second of two posts (first is here) that accompany our visualization, which is open-sourced.

As explored in our previous post, models that predict depth can be difficult to debug and understand. One way we can start to shed some light on their inner workings it to see when they fail (or do not fail) in interesting ways. In this blogpost, we explore this in two ways.

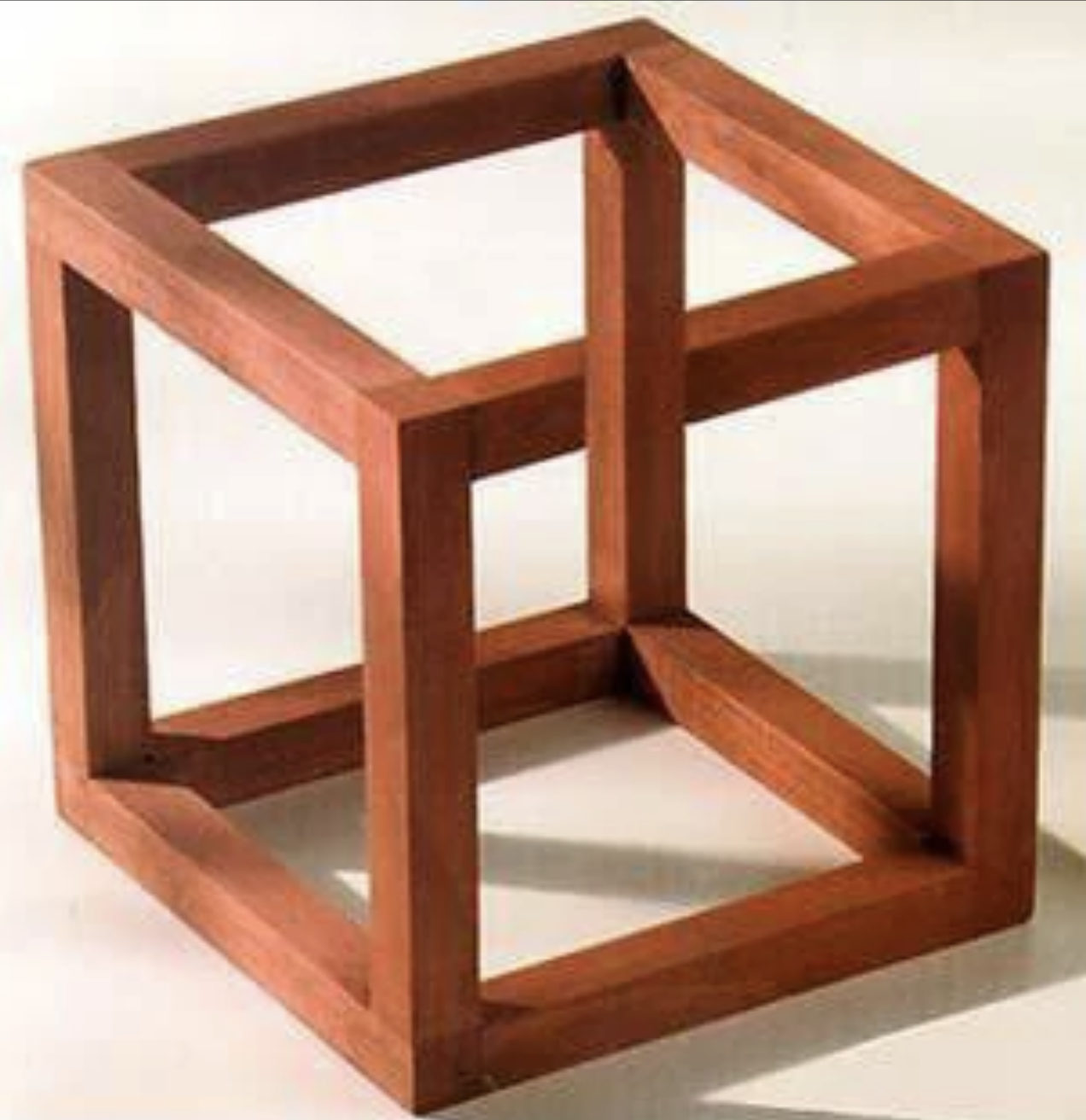

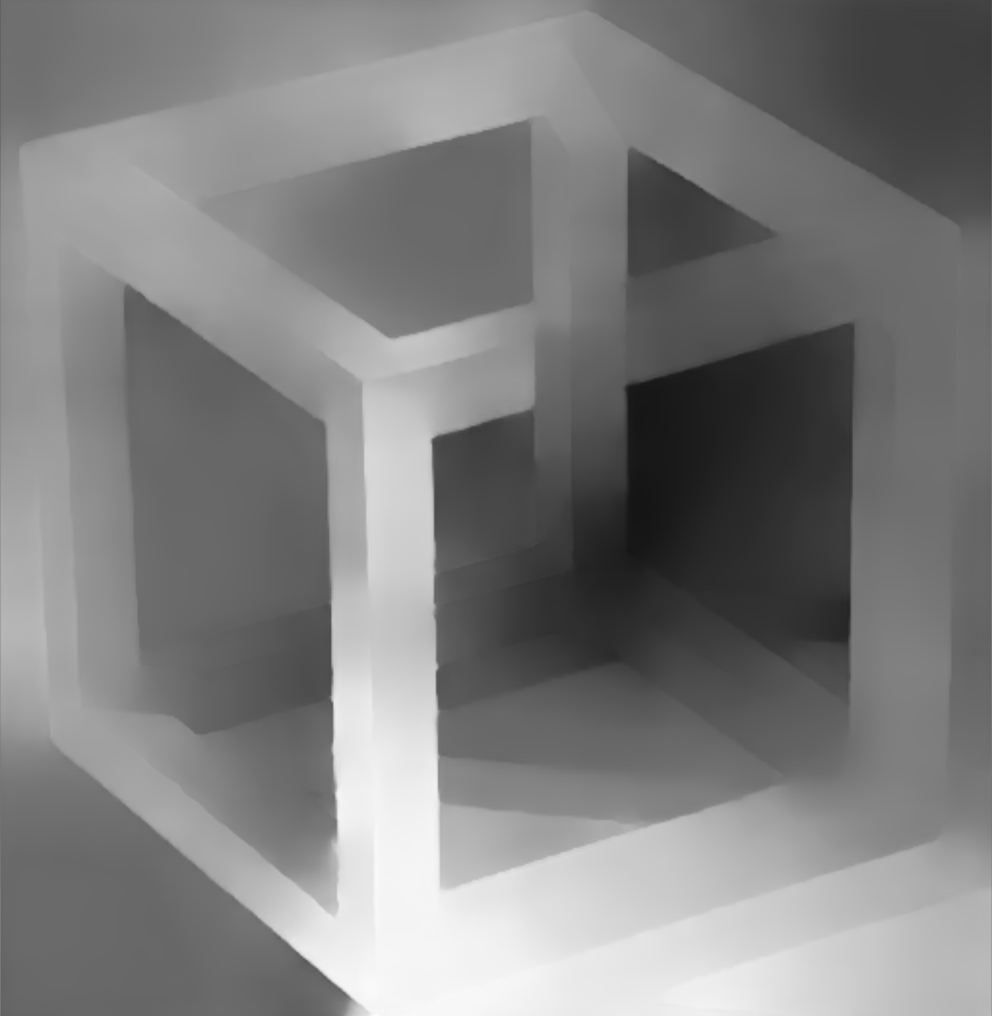

The first is through 3D visual illusions. These images, popularized by M.C. Escher in the 1900s, have fascinated us with their ability to force us into a state of cognitive dissonance. As with the images below, they look locally correct, but are physically impossible-- no true depth map can exist for them. This makes them interesting test cases for depth map models, which are forced to create a global depth map.

We also experiment with a different kind of “designed to confuse” image: programmatically generated adversarial images. These adversarial attack images have been created for many types of image models, and indeed are possible to create for depth map models as well.

Out-of-Distribution Images

Optical Illusions

For these experiments, we use a model similar to this one, which learned to interpret 3D shapes by observing tens of thousands of scenes shot with a moving camera.

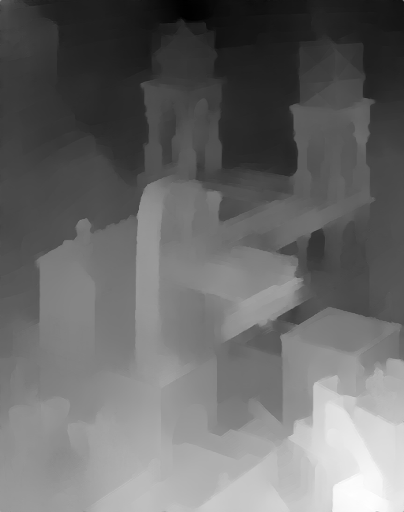

In the context of depth prediction, impossible object illusions are another set of interesting image examples. For example, in the image above, the water is simultaneously flowing away from the wheel along the trough, but also back toward the waterfall, which is directly above the wheel (there are also a few other hidden impossibilities in the picture).

These images purposefully depict physically impossible objects or spaces, so no true depth map can exist. How, then, will the model react when it is forced to make a depth prediction? Will it look at local edges or try to glean some global structure from the image? It’s hard to quantitatively analyze this or make a generalizable conclusion, but from this example of an Escher print, the model does seem to be trying to impose some sort of global structure on the image. That is, the water trough does seem to be interpreted as a single cohesive element which uniformly moves into the background, rather than being bent to satisfy the constraint of the towers. Similarly, in the impossible cube, the model does not try to reconcile the crossing bars by bending them, but instead just sees the front bar as having a conveniently-sized gap where the other crosses it. Again, though, these are just anecdotes-- a further quantifiable analysis would be interesting future work.

Generating Adversarial Examples

In addition to explorations of impossible object illusions, we were also curious about another kind of image that’s outside the model’s training distribution: generated adversarial examples.

We generated adversarial examples for depth models using the standard gradient descent approach (see generation script here). These experiments were done using this open-source DenseDepth model.

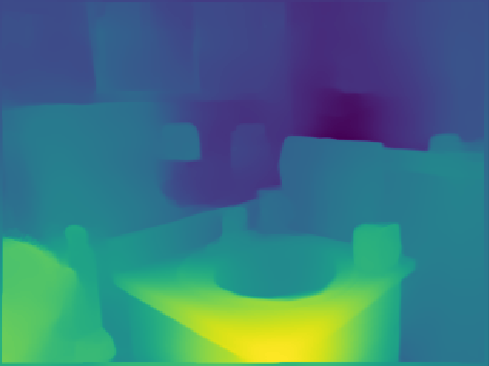

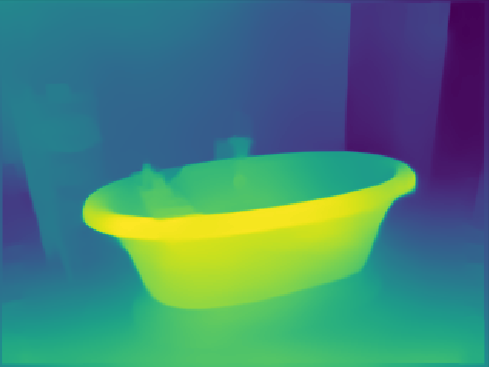

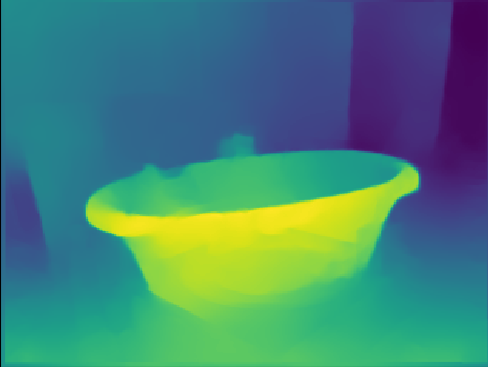

In one example, we started with this image of a bathroom sink (left), which produces the corresponding depth map (middle), and we tried to manipulate the input image so that the output depth map was that of the bathtub (right).

We ran gradient descent, altering the image input to with the loss as the mean-squared error of the difference between the generated depth output and the target depth output. After 250 iterations with a step size of .001, we generated the following:

Indeed, we were able to generate images that look very similar to the human eye, but which produce dramatically different output depth maps. As with all models prone to adversarial attacks, this is concerning, and doubly so here with the frequent use case of depth map models in self-driving cars (examples here and here) and other systems that interact directly with the physical world.

Conclusion

Exploring the failure cases of a model can shed light on its performance, revealing potential vulnerabilities, pathologies, and even explanations for its inner workings. In this blogpost, we explore two types of out-of-distribution images, illusions and manually created adversarial examples, and see how we can learn from the model’s performance on them.

Thanks!

It looks like independently, Jonathan Fly had a similar idea-- if you enjoyed this blogpost, you might like this series of tweets!